Like many of you, I’m sure, one of my sites took a little hit from Google’s June Core Web Vitals search ranking update, and I’ve been spending a lot of time digging into these numbers in an attempt to find a shortcut or two that might help get my metrics up and recover a few search positions.

Checking Your Core Web Vitals

A great tool for measuring Core Web Vitals statistics is the Google PageSpeed Insights test page located here…

https://developers.google.com/speed/pagespeed/insights/

…which allows you to submit a URL and then let Google tell you what it thinks of the page. There are a number of other tools around that use the Lighthouse APIs and try to measure these metrics, but I’m assuming this is likely the closest approximation to what Google uses for actual ranking results.

Weird Things to Know About the PageSpeed Insights Checker

There are a few things to note about using this particular tool.

First, take note that the page reports different data for mobile and desktop analysis. The mobile analysis is probably what you care about since Google announced mobile-first ranking, even for desktop search results. For the “Lab Data” section of the mobile results, the measurements look truly dreadful in most cases, but if you read the fine print Google is performing the mobile analysis as though you’re on an ancient Android phone accessing the internet from a 3G network in central Timbuktu. So for practical purposes, these measurements should be treated as relative measurements and not absolute reflections of what a user on a more up-to-date device on WiFi or a modern cellular network would experience.

Second, it reports a lot of results that don’t really have anything to do with the test you just ran. In particular, the top part of the results page shows “Field Data”, which is telemetry collected from real-world Chrome users. You won’t see these values change from test to test because it’s ongoing data collection from a 28-day window of live visitors. If you’re tuning your site, you won’t see these numbers change for several days at a minimum, and even then only if you’ve made a substantial tweak.

Finally, the “Lab Data” section, which is the actual data from the test you just ran, will vary by quite a bit from run to run. If you change something and want to really measure the difference, you need to run this test multiple times and analyze the aggregate results.
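If clicking the button over and over gets old, the same test can be run through the PageSpeed Insights API. What follows is only a rough sketch of how I'd collect the lab numbers from one run, assuming the v5 `runPagespeed` endpoint and the Lighthouse audit IDs as I understand them; the function names (`build_psi_url`, `lab_metrics`, `run_once`) are my own, and I'm assuming unauthenticated requests are acceptable for a handful of runs.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# PageSpeed Insights API v5 endpoint (no API key used in this sketch).
PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def build_psi_url(page_url: str, strategy: str = "mobile") -> str:
    """Build the request URL for a single mobile or desktop test run."""
    return f"{PSI_ENDPOINT}?{urlencode({'url': page_url, 'strategy': strategy})}"


def lab_metrics(response: dict) -> dict:
    """Pull the lab (Lighthouse) numbers out of one parsed API response."""
    lighthouse = response["lighthouseResult"]
    audits = lighthouse["audits"]
    return {
        # The API reports the score as 0..1; the web page shows 0..100.
        "score": round(lighthouse["categories"]["performance"]["score"] * 100),
        "fcp_ms": audits["first-contentful-paint"]["numericValue"],
        "lcp_ms": audits["largest-contentful-paint"]["numericValue"],
        "tbt_ms": audits["total-blocking-time"]["numericValue"],
        "cls": audits["cumulative-layout-shift"]["numericValue"],
    }


def run_once(page_url: str, strategy: str = "mobile") -> dict:
    """Run one live test and return its lab metrics (needs network access)."""
    with urlopen(build_psi_url(page_url, strategy)) as resp:
        return lab_metrics(json.load(resp))
```

Calling `run_once` in a loop and keeping each result in a list gives you the raw material for the kind of aggregate comparison described here.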

JavaScript weighs in on many of the dimensions of the Core Web Vitals statistics, and in looking into the detailed breakdown of the diagnostics, I noticed that CloudFlare’s Rocket Loader script was one of the heavyweights that PageSpeed Insights said was contributing negatively to multiple statistics.

What is CloudFlare’s Rocket Loader?

If you’ve been around the site here for long, you know I’m a big fan of CloudFlare, so much so that I actually started buying some of their stock (and for full disclosure, other than as a very small shareholder, I have no financial relationship with them).

Their Rocket Loader optimization is available as part of their free tier and attempts to optimize the way JavaScript files are delivered to site visitors. For a detailed description of what Rocket Loader does, this link from CloudFlare’s blog probably describes it best…

https://blog.cloudflare.com/too-old-to-rocket-load-too-young-to-die/

The TL;DR version is that when CloudFlare caches your site content, it figures out which JavaScripts are on the page and then bundles them up into larger requests so that they can be more efficiently cached and delivered to the browser. This addresses a lot of issues related to asynchronous loading, scripts that interact with the DOM and more, and in general Rocket Loader is supposed to help with things like Core Web Vitals.

Rocket Loader versus Core Web Vitals Deathmatch!

To put Rocket Loader to the test, I ran multiple tests against the multiplication worksheets page on DadsWorksheets.com, which is one of the higher volume pages on the site and one where I’ve been doing most of my Core Web Vitals optimization work.

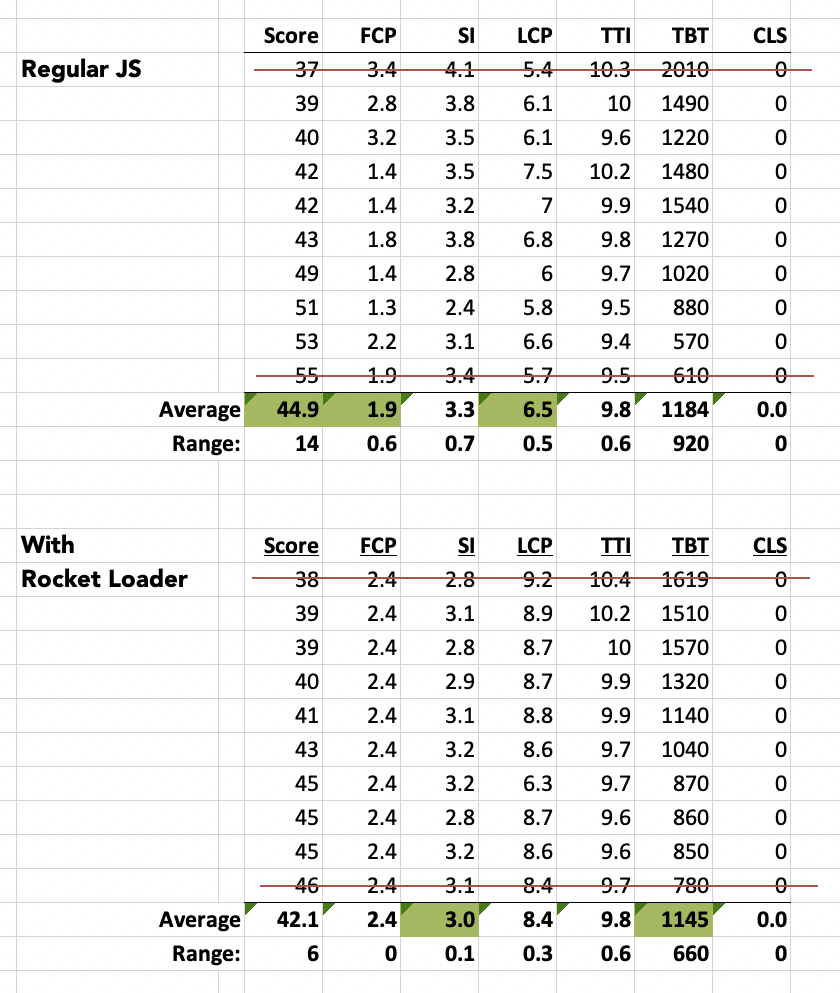

I ran the test ten times each with Rocket Loader enabled and just with regular JavaScript, threw away the best and worst score from each group and then averaged the results. The data looks like this…
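For anyone who wants to repeat the exercise, here is a minimal sketch of that averaging step. It reflects one reading of my own method: drop the run with the best score and the run with the worst score, then average every metric over what is left. The function name `average_without_extremes` and the dictionary keys are made up for illustration.

```python
def average_without_extremes(runs, key="score"):
    """Drop the runs with the lowest and highest `key`, then average each metric.

    `runs` is a list of dicts, one per test run, all sharing the same keys.
    """
    if len(runs) < 3:
        raise ValueError("need at least three runs to discard two of them")
    # Sort by overall score and slice off the worst and best run.
    kept = sorted(runs, key=lambda run: run[key])[1:-1]
    return {name: sum(run[name] for run in kept) / len(kept) for name in kept[0]}
```

With ten runs per group this averages the middle eight, which keeps one freak result in either direction from skewing the comparison.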

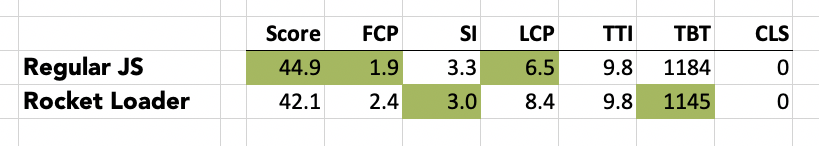

To make that just a little easier to compare, here are just the average rows…

Counterintuitively, the PageSpeed Insights score with Rocket Loader enabled seemed to be close to 3 points worse than running regular JavaScripts on the site. But as usual with Google, the story is a little more complicated.

An interesting point in Rocket Loader’s favor comes from taking note of the ranges returned in the test numbers… Rocket Loader seemed to substantially reduce the variability in the metrics compared to regular JavaScript. This definitely tells me Rocket Loader is doing something (arguably something positive) in terms of the way the site behaves.
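If you want to put a number on that variability rather than eyeballing the ranges, a small helper like the one below would do it. This is just a sketch using Python's standard `statistics` module; the function name `spread` is my own, and you would feed it one metric's values from one group of runs.

```python
from statistics import stdev


def spread(values):
    """Summarize run-to-run variability for one metric across several runs."""
    lowest, highest = min(values), max(values)
    return {
        "min": lowest,
        "max": highest,
        "range": highest - lowest,
        # Sample standard deviation; needs at least two values.
        "stdev": stdev(values),
    }
```

Comparing the `range` and `stdev` of, say, the LCP values with and without Rocket Loader makes the difference in consistency explicit.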

What seems to throw the score metric off is primarily First Contentful Paint (FCP) and Largest Contentful Paint (LCP). Both of these numbers are meaningfully higher. And given that version 8 of the Lighthouse score metric assigns a whopping 25% weighting to LCP, it’s pretty obvious that’s the culprit. But why?
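To see how much leverage that 25% gives LCP, here is a rough sketch of the weighted sum behind the overall score. The weights are the Lighthouse 8 values as I understand them from Google's scoring documentation. The inputs are each metric's individual 0 to 1 score, not the raw millisecond values; Lighthouse converts raw values to scores with its own curves, which this sketch does not attempt to reproduce.

```python
# Lighthouse 8 performance weights (assumed from the published scoring docs).
LIGHTHOUSE_8_WEIGHTS = {
    "FCP": 0.10,  # First Contentful Paint
    "SI": 0.10,   # Speed Index
    "LCP": 0.25,  # Largest Contentful Paint
    "TTI": 0.10,  # Time to Interactive
    "TBT": 0.30,  # Total Blocking Time
    "CLS": 0.15,  # Cumulative Layout Shift
}


def performance_score(metric_scores):
    """Combine per-metric scores (each 0..1) into a 0..100 overall score."""
    return 100 * sum(
        weight * metric_scores[name]
        for name, weight in LIGHTHOUSE_8_WEIGHTS.items()
    )
```

Under these weights, a page that is perfect on everything else but only scores 0.5 on LCP loses 12.5 points overall, so a modest LCP regression can easily outweigh small gains in SI or TBT.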

What the Heck is Going On?

I’m still guessing at what this data is telling me, but I have a pretty good suspicion about what’s going on. The site presently is statically generated by Nuxt.js, which loads a ton of small (and not so small… manifest.js? Seriously guys?) JavaScript files on each page.

I suspect Rocket Loader is bundling up and loading all of these files in one big gulp, which is great from the consistency point of view, but apparently not so great in that this loading and rehydration is getting in front of the render pipeline with the largest paint element in it.

What that means is my case here may be slightly pathological and atypical, but it’s definitely one of those reasons why you don’t just blindly assume flipping a switch on a dashboard is a shortcut to great performance.

So What Am I Doing Next?

My hope is I can untangle those and make sure the largest (and first) contentful paints are handled before the JavaScript needs to execute, in which case both the FCP and LCP numbers should come down, leaving the small SI and TBT advantages Rocket Loader contributed.

LCP continues to be a struggle for me for some reason, not the least because the static build process with Nuxt is unbelievably slow on a site with over 10,000 pages. I have some hopes Nuxt 3 will be better, and I’ve got on my to-do list to look at Vite and vite-ssg+vite-plugin-md+vite-plugin-pages in the near future, but in the short term I’m siloed. If you have experience with that Vite stack, let me know in the comments below how it went.

I think the end game is to leave Rocket Loader enabled and struggle through optimizing my LCP issues away. I’ll follow up with another post if I make any interesting progress, but if I don’t, the numbers don’t lie… And I may wind up switching Rocket Loader off until I’m on a less complicated static deployment.