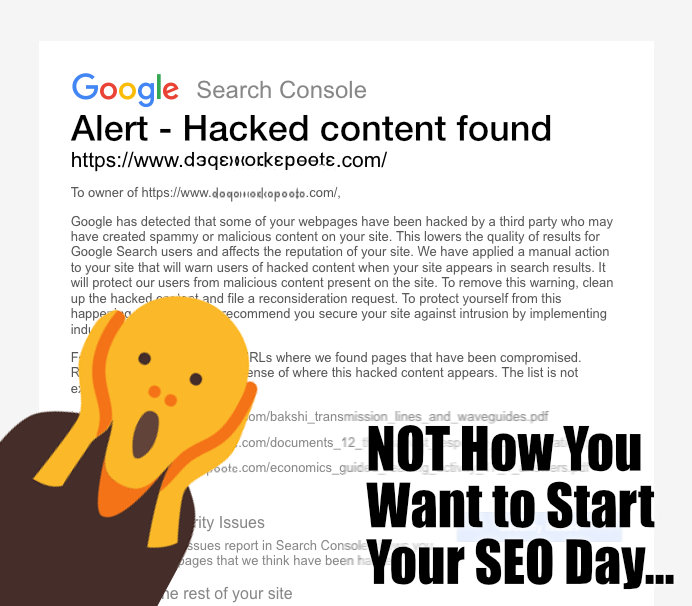

When your livelihood is tied to website traffic, one of the worst things you can wake up to is an email from Google Search Console.

I’m no stranger to bad news from the Big G, or to the non-communication and horror-movie, circular, forward-you-to-the-right-group conversations that go along with any dialog you might think would rectify the problem. I spent six months trying to get my site off the Ad Exchange blacklist because of a minor AdWords violation on an account I didn’t even know was still serving $10 worth of ads per month. Which sounds insane, I know, because my ancient AdWords account should have nothing at all to do with my display partner’s Ad Exchange account, but believe me… What a mess. In that case, I was fortunate that a friend-of-a-friend-of-a-friend had a direct Google rep with a back door into some super-secret re-evaluation queue not visible to mere mortals.

But my real talent seems to be using DNS records to try to shoot myself in the hard drive. A couple of years back I managed to take my math worksheets site offline with a DNS record change. I thought the change was working fine, but only because a forgotten localhost entry still resolved to the right address. When I saw a huge traffic drop in Google Analytics the next day, I immediately knew I’d messed up, but that brief span of time offline wiped out almost six months’ worth of SEO ranking progress. I’m a huge fan of Uptime Robot now.
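For what it’s worth, here is a minimal sketch of the kind of check that would have caught that one. It assumes the “localhost entry” was a line in the machine’s hosts file (for example `/etc/hosts`), and the function name `hosts_overrides` and the `example.com` domain are made up for illustration. It just lists any hosts-file lines that pin a name at or under your domain, since those lines can hide what public DNS really says.

```python
from typing import Dict, List


def hosts_overrides(hosts_text: str, domain: str) -> Dict[str, List[str]]:
    """Map each hostname at or under `domain` to the addresses pinned for it.

    `hosts_text` is the raw content of a hosts file. Hypothetical helper,
    not part of any library.
    """
    domain = domain.lower()
    suffix = "." + domain
    found: Dict[str, List[str]] = {}
    for line in hosts_text.splitlines():
        # Drop trailing comments, then split the rest on whitespace.
        entry = line.split("#", 1)[0].split()
        if len(entry) < 2:
            continue  # blank line, comment-only line, or address with no names
        address, names = entry[0], entry[1:]
        for name in names:
            name = name.lower()
            if name == domain or name.endswith(suffix):
                found.setdefault(name, []).append(address)
    return found


if __name__ == "__main__":
    # Path is an assumption; Windows keeps this file elsewhere.
    with open("/etc/hosts", encoding="utf-8") as handle:
        for host, addresses in hosts_overrides(handle.read(), "example.com").items():
            print(f"{host} is pinned locally to {', '.join(addresses)}")
```

If that prints anything for a domain you just changed, your browser is probably not seeing what the rest of the world sees.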

Subdomain DNS Records are Dangerous

So you can bet I’ve become pretty dang careful with DNS records pointed at my primary site. But I’m also a developer.

There’s that old adage, “Real Developers Test It In Production,” something you should not subscribe to, so naturally I sandbox development and staging servers on subdomains. And of course, a subdomain means a DNS A/AAAA record that needs your full attention. And that, friends, is the beginning of the reason why I got another Google Search Console email a couple of days ago and why I’m doing something different with my dev servers going forward.

The obvious scary thing in the email’s subject line was the words “Hacked Content” and then, if that wasn’t enough to make every hair stand on end, the body text shouted “manual penalty” with a handy link right to the page on Google Search Console, which provided a big fat confirmation of every bit of bad news. Great.

After I calmed down a bit, I settled in to see what was going on. Google helpfully provided links to some of the pages that it claimed were hacked, and none of the URLs looked right at all. None were coming from the main www subdomain, which immediately lowered my heart rate, but even the URLs to the development subdomain they all referenced looked really weird.

And then it dawned on me: that development subdomain wasn’t even around any more. I had decommissioned the server it was running on months ago, so that content couldn’t even be coming from a machine I was using. That server was gone, but the subdomain’s DNS record was still resolving to its old IP address. And when I’d surrendered that IP address back to Linode, it meant that basically anybody else could start using a new server with that IP for their own purposes. So when someone else spun up a new site, it became reachable via a subdomain I still had defined. DNS-induced brain damage, part two, it seemed.
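A rough way to catch this sort of leftover record is to compare what each subdomain resolves to against the list of IP addresses you actually still hold. The sketch below is only that, a sketch: `resolve_ips` and `find_dangling` are names I made up, the hostnames and addresses are placeholders, and you would have to maintain the list of owned IPs yourself.

```python
import socket
from typing import Callable, Dict, Iterable, List, Set


def resolve_ips(hostname: str) -> Set[str]:
    """Return the A/AAAA addresses the system resolver gives for a hostname."""
    try:
        infos = socket.getaddrinfo(hostname, None)
    except socket.gaierror:
        return set()  # no record, or the lookup failed: nothing to flag
    return {info[4][0] for info in infos}


def find_dangling(
    hostnames: Iterable[str],
    owned_ips: Iterable[str],
    resolver: Callable[[str], Set[str]] = resolve_ips,
) -> Dict[str, List[str]]:
    """Map each hostname to the addresses it resolves to that you do not own."""
    owned = set(owned_ips)
    dangling: Dict[str, List[str]] = {}
    for host in hostnames:
        strays = resolver(host) - owned
        if strays:
            dangling[host] = sorted(strays)
    return dangling


if __name__ == "__main__":
    # Placeholder values: substitute your own subdomains and current server IPs.
    subdomains = ["www.example.com", "dev.example.com", "staging.example.com"]
    my_ips = {"198.51.100.7"}
    for host, addresses in find_dangling(subdomains, my_ips).items():
        print(f"{host} points at {', '.join(addresses)}, which is not on your list")
```

One caveat: `socket.getaddrinfo` goes through the system resolver, which usually consults the local hosts file too, so a stale local entry can mask the public answer. Running this from a machine other than your dev box is probably the safer bet.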

So in this case, there wasn’t any “hacked content” anywhere. My DNS just made it look as though I was serving duplicate content from some random site under a subdomain I controlled. And while the manual penalty suggested it was only relevant to URL patterns that matched that subdomain, it was also pretty specific that the penalty affected the overall reputation and authority of everything under the domain, so fixing it right away was a priority.

The obvious and easy solution was just to delete the DNS record pointing to that subdomain, wait for propagation and then file a reconsideration request through Search Console. Even though the reconsideration request indicated that Google took “several weeks” to review anything, I did thankfully get a follow up email in roughly 36 hours that said the penalty had been removed.

I’m not sure if I took a short-term traffic hit on the main domain, as all of these events transpired over a weekend, and traffic for this site drops off significantly on weekends and around the holidays in general. Otherwise the site traffic looks normal in spite of the brief stint with the manual penalty in place. So far, it looks like I dodged whatever bullet might have been headed my way. I think an important contributor to this rapid turnaround and to preserving rankings was that I fixed the issue quickly and explained in clear detail in the reconsideration request what happened and how I resolved it, and specifically that it wasn’t actually a malicious hack.

But the key takeaway is not just to be super careful managing your DNS entries, but also to run any publicly visible development and test boxes under a domain that has nothing to do with your main property.

If this had been an actual hack of a machine we were using for something critical, and maybe one that appeared more malicious than serving duplicate content, that manual penalty could have had a real negative financial consequence for the main site. It’s hard enough to secure a production server, but a development machine that is transient in nature is probably going to be less secure, and potentially a softer attack vector.

SEO is hard work, and shooting yourself in the DNS is pretty easy. If a hack, or even just a DNS misconfiguration, of a dev machine can lead to a manual penalty that affects not just the subdomain, but your entire web property, it’s much wiser to have it far away from your main domain. In the future, I’ll be running any publicly visible dev machines under an entirely different domain name for this reason.